Search

🧠 Introduction to AI

Neu-Ulm University of Applied Sciences

February 12, 2024

Search

Examples

Agents

Agents that plan ahead by considering a sequence of actions that form a path to a goal state are called problem-solving agents (Russel & Norvig, 2022, p. 81)

- The computational process it undertakes is search

- The representations the agents use are atomic representations1

- There are search algorithms for several environments

Here only simple environments are considered (episodic, single agent, fully observable, deterministic, static, discrete, and known).

We assume that information about the environment are given (e.g., a map)

Process

In simple environments, agents can follow a four-phase-problem-solving process (Russel & Norvig, 2022, pp. 81–82):

- Goal formulation: goals organize behavior by limiting the objectives and hence the actions to be considered

- Problem formulation: the agents devices a description of the states and actions necessary to reach the goal—an abstract model of the relevant part of the environment

- Search: the agent simulates sequences of actions in its model, searching until it finds a sequence that reaches the goal (i.e., the solution)

- Execution: the agent executes the actions in the solution, one at a time

Search problem

Definition

A search problem can be defined formally as (Russel & Norvig, 2022, p. 83):

- The state space: a set of possible states2 the environment can be in3

- The initial state: the state that the agent starts

- The goal state(s): a state that the agent is trying to reach4

- A transition model: \(\textrm{RESULT}(s,a)\) (returns the state \(s'\) that results from performing action \(a\) in state \(s\))

- The successor function: \(\textrm{ACTIONS}(s)\) (returns a set of (action, state) pairs for node \(s\), where the state is the state reachable by taking the action \(a\))

- An action cost function: \(\textrm{ACTION-COST}(s,a,s')\) (gives the numeric cost of performing action \(a\) in state \(s\) to reach state \(s'\))

- A path: a sequence of actions

- A solution: a path from the initial state to the goal state

Modelling

Search tree

Structure

Search algorithms

Definition

Search algorithms take a search problem as input and return a solution, or an indication of failure (Russel & Norvig, 2022, p. 89).

- They superimpose a search tree over the state-space graph,

- form various paths from the initial state, and

- try to find a path that reaches a goal state

They can implement

- uninformed search methods, which only have access to the problem definition but not clue about how close a state is to the goal(s).

- informed search methods, which have access to a heuristic function that gives domain-specific hints about how close a state is to the goal(s) (e.g., using straight-line distance in route-finding problems)

Uninformed search

Greedy best-first search (Russel & Norvig, 2022, p. 103ff)

The Greedy best-first search uses the evlation function \(f(n) = h(n)\)

It expands the first node with the lowest \(h(n)\) value in the queue.

In route finding problems, the straight-line distance heuristic is used as \(h(n)\).

Greedy best-first graph search is complete in finite state spaces, but not in infinite ones.

A* Search (Russel & Norvig, 2022, p. 103ff)

The A* search (pronounced “A-star search”) uses the evaluation function \(f(n) = g(n) + h(n)\)

- \(g(n)\) is the path cost from the initial state to node \(n\) (e.g., computed by using the action-cost function)

- \(h(n)\) is the estimated cost of the shortes path from \(n\) to a goal state

A* search is complete, if it is cost-optimal depends on the heuristic

Online search

The agents considered so far use offline search algorithm. They compute a complete solution before taking their first action. They are not helpful in unknown environments.

Online search agents interleaves computation and action:

- Takes action,

- observes the environment, and

- computes the next action,

These agents can discover successor only for a state that is occupied or that is learned (i.e., contained in a map created online)

Online search is a good idea in dynamic or semi-dynamic environments.

Competitive environments

Tree search in action

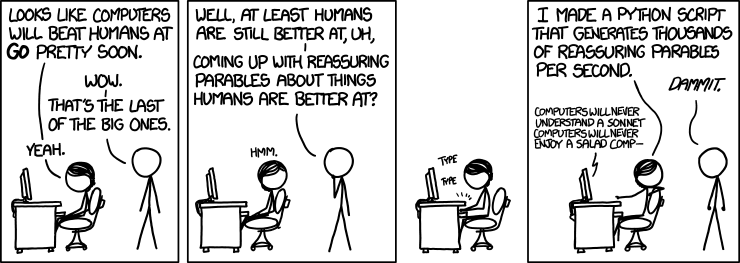

AlphaGo is a computer program that plays the board game Go, which is considered much more difficult for computers to win than other games such as chess because its strategic and aesthetic nature makes it difficult to construct a direct scoring function. AlphaGo was developed by the London-based DeepMind Technologies, an acquired subsidiary of Alphabet Inc.

AlphaGo use a Monte Carlo tree search algorithm to find their moves based on knowledge previously acquired through machine learning, specifically an artificial neural network (a deep learning method) through extensive training, both from human and computer games. A neural network is trained to recognize the best moves and the winning rates of those moves. This neural network improves the strength of the tree search, leading to stronger move selection in the next iteration.

Adversarial search

In competitive environments, two or more agents have conflicting goals.

This gives rise to adversarial search problems.

The AI community is particularly interested in games of a simplified nature (e.g., chess, go, and poker), as in such games

- the state of a game is easy to represent,

- agents are restricted to a few actions, and

- effects of actions are defined by precise rules.

Deterministic games

The games most commonly studied within AI are deterministic (two-player, turn-taking, fully observable, zero-sum8) (Russel & Norvig, 2022, p. 192).

Possible formalization

- States: \(S\) (start at \(S_0\))

- Player: \(\textrm{TO-MOVE}(s)\) (defines which player has the move in state \(s\))

- Actions: \(\textrm{ACTIONS}(s)\) (the set of legal moves in state \(s\))

- Transition model: \(\textrm{RESULTS}(s,a)\) (defines the state resulting from action \(a\) in state \(s\))

- Terminal test: \(\textrm{IS-TERMINAL}(s)\) (is true when the game is over9)

- Utility function: \(\textrm{UTILITY}(s,p)\) (defines the final numeric value to player \(p\) when the game ends in terminal state \(s\))

The initial state, \(\textrm{ACTIONS}(s)\), and \(\textrm{RESULT}(s,a)\) define the state space graph, where the vertices are states, the edges are moves and the state might be reached by multiple paths.

Optimal decisions

Players (here MIN and MAX, alternate turns) need to have a conditional plan—a contingent strategy specifying a response to each move of the opponent.

- For games with binary outcomes (win or lose), AND-OR10 search tree can be used

(see Russel & Norvig, 2022, p. 143) - For games with multiple outcome scores, the minimax search algorithm is used

Minimax search

Minimax value

Given a state-space search tree, each node’s minimax value is calculated.

The minimax value is the best achievable utility of being in a given sate (against a rational adversary).

MAXprefers to move to a state of maximum value

MINprefers to move to a state of minimum value

Adversarial game tree

The △ nodes are “MAX nodes”, in which it is MAX’s turn to move; the ▽ nodes are “MIN nodes”. MAX’s best move at the root is α₁ (the highest minimax value), MIN’s best move is β₁ (the lowest minimax value).

Minimax search algorithm

The minimax algorithm performs a complete depth-first exploration of the game tree (Russel & Norvig, 2022, pp. 196–196).

- Assumes that the adversary plays optimal

- Returns action whose terminal state has the optimal \(\textrm{MINIMAX}\) value

- If the state is a terminal state (\(\textrm{IS-TERMINAL}(s) = true\)):

return the state’s utility (\(\textrm{UTILITY}(s,p)\)) - If the next agent is \(\textrm{MAX}\) (\(\textrm{TO-MOVE}(s) = MAX\)): return \(\textrm{MAX-VALUE}(s)\)

- If the state is a terminal state (\(\textrm{IS-TERMINAL}(s) = true\)):

The exponential complexity makes the miminmax algorithm impractical for complex games (even with alpha-beta pruning applied; chess game tree size > atoms in the universe).

Pruning to reduce computing complexity (Russel & Norvig, 2022, pp. 198–199).

Pruning stops the search at the moment when it is determined that the value of a subtree is worse than the best solution already identified.

The general principle is as follows: consider a node n somewhere in the tree, such that Player has a choice of moving to n. If Player has a better choice either at the same level or at any point higher up in the tree, then Player will never move to n. So enough about n is found out (by examining some of its descendants) to reach this conclusion, it can be pruned (Russel & Norvig, 2022, p. 198).

Alpha-beta pruning gets its name from the two extra parameters in MAX-VALUE(state,α,β) that describe the bounds on the backed-up values that appear anywhere along the path:

- α = the value of the best choice for

MAXfound so far (“at least”) - β = the value of the bast choice for

MINfound so far (“at most”)

Alpha-beta search updates the values of α and β as it goes along and prunes the remaining branches at a node as soon as the value of the current node is known to be worse than the current α and β for MAX or MIN, respectively.

Pruning

What is pruning?

Read the note about pruning (and consult Russel & Norvig (2022) or the internet if necessary).

Explain in your own words, under what conditions a subtree is skipped using Alpha-beta pruning.

Draw an example (game search tree, 3 levels depth).

Monte Carlo tree search

Monte Carlo tree search (MCTS)11 does estimate the value of a state as the average utility12 over a number of simulations of complete games starting from the current state (Russel & Norvig, 2022, pp. 207–209).

Each iteration follows four steps:

- Selection (choosing a move13, leading to a successor node, and repeat that process, moving down the tree to a leaf)

- Expansion (one to several new children are created for the selected node)

- Simulation (a simulation for the newly generated child node is performed)

- Back-propagation (the result of the simulation is used to update all the search tree nodes going up to the root)

Repeats for a set number of iterations or until a given time is over. At the end the successor with the hightest utily is chosen.

Function

To what generic function of agents does MCTS relate to?

It is the utility function that measures its preferences among states of the world.

Function

What should the selection process look like?

Selection

Figure 5 shows a tree with the root representing a state where P-A has won 37/100 playouts done

P-Ahas just moved to the root nodeP-Bselects a move to a node where it has won 60/79 playouts; this is the best win percentage among the available movesP-Awill select a move to a node where it has won 16/53 playouts (assuming it plays optimally)P-Bthen continues on the leaf node marked 27/35- … until a terminal state is reached

It would also have been reasonable to select the 2/11 node for the sake of exploration—with only 11 playouts, the node still has high uncertainty in its valuation, and might end up being the best option if more information about it is gained. So it makes sense to use a selection policy that balances exploitation and exploration.

Selection policy example

Upper confidence bounds applied to trees (UCT) is a very effective selection policy ranking possible moves based on an upper confidence bound formula (UCB1)

\[ \textrm{UCB1}(n) = \frac{\textrm{U}(n)}{\textrm{N}(n)} + C * \sqrt{\frac{\log{\textrm{N}(\textrm{PARENT}(n))}}{\textrm{N}(n)}} \]

- \(\textrm{U}(n)\) is the total utility of all playouts that went through node \(n\)

- \(\textrm{N}(n)\) is the number of playouts through node \(n\)

- \(\textrm{PARENT}(n)\) is the parent node of \(n\) in the tree

- \(\frac{\textrm{U}(n)}{\textrm{N}(n)}\) is the average utility of \(n\) (exploitation term, “how good are the stats?”)

- \(\frac{\log{\textrm{N}(\textrm{PARENT}(n))}}{\textrm{N}(n)}\) is higher for \(n\) only explored a few times

(exploration term, “how much has the child be ‘ignored’?”) - \(C\) is a constant that balance exploitation and exploration (theoretically \(\sqrt{2}\))

With \(C=1.4\), the 60/79 node in Figure 5 has the highest UCB1 score, but with \(C=1.5\), it would be the 2/11 node.

Utiliy of MCTS

The conventional wisdom has been that Monte Carlo search has an advantage over heuristic alpha-beta tree search (not discussed here, see (Russel & Norvig, 2022, p. 202ff)) for games where the branching factor is very high (and thus alpha-beta can’t search deep enough), or when it is difficult to define a good evaluation function 14.

Expansion and simulation

Figure 6 shows a tree where a new child of the selected node is generated and marked with 0/0 (expansion).

Back-propagation

The result of the simulation is used to update all the search tree nodes going up to the root.

P-B'snodes are incremented in both the number of wins and the number of playoutsP-A'snodes are incremented in the number of playouts only

Wrap-up

Search

Search operates over models of the world (which might be observed online)

- Agents do not try all possible plans

- Planning is all “in simulation”

- Search is only as good as the models are

Adversarial search

- In two-player, discrete, deterministic, turn-taking zero-sum games with perfect information, the minimax algorithm can select optimal moves by a depth-first search in the game tree

- Efficiency can be improved by using the alpha-beta search algorithm, which eliminates subtrees that are shown to be irrelevant.

- Monte Carlo tree search evaluates states by playing out the game all the way to the end to see who won. This playout simulation is repeated multiple times. The evaluation is an average of the results.

xkcd

✏️ Exercises

Definition sequence

Problem formulation must follow goal formulation.

Discuss that statement. Is it true or false? Why?

Problem formulation

Give a complete problem formulation for each of the following problems. Choose a formulation that is precise enough to be implemented.

- There are six glass boxes in a row, each with a lock. Each of the first five boxes holds a key unlocking the next box in line; the last box holds a banana. You have the key to the first box, and you want the banana.

- There is an n x n grid of squares, each square initially being either unpainted floor or a bottomless pit. You start standing on an unpainted floor square, and can either paint the square under you or move into an adjacent unpainted floor square. You want the whole floor painted.

Maze

Your goal is to navigate a robot out of a maze. It starts in the center of the maze facing north. You can turn the robot to face north, east, south, or west; direct the robot to move forward a certain distance (it will stop before a wall).

- Formally define this problem as a search problem. How large is the state space?

- In navigating a maze, the only place we need to turn is at the intersection of two or more corridors. Reformulate this problem using this observation. How large is the state space now?

- From each point in the maze, we can move in any of the four directions until we reach a turning point, and this is the only action we need to do. Reformulate the problem using these actions. Do we need to keep track of the robot’s orientation now?

- In our initial description of the problem we already abstracted from the real world. Name three such simplifications we made.

Open only if you need help.

Concepts

Explain in your own words the following terms:

- Zero-sum

- Terminal test

- Minimax value

- Selection policy

- Playout policy

- Monte Carlo tree

- Back-propagation

MINIMAX

Explain if the MINIMAX algorithm is complete and optimal.

Can it be beaten by an opponent playing suboptimally? Why (not)?

Come up with a game tree in which MAX will beat a suboptimal MIN.

Open only if you need help.

In two-player, discrete, deterministic, turn-taking zero-sum games with perfect information, the MINIMAX algorithm can select optimal moves by a depth-first emuration, the algorithm is also guaranteed to find a solution when there is one.

The algorithm performs a complete depth-first exploration of the game tree. If the maximum depth of the tree is \(m\) and there are \(b\) legal moves at each point, then the time complexity is \(O(b^m)\) for an algorithm that generates all actions at once, or \(O(m)\) for an algorithm that generates actions on at a time. The exponential complexity makes MINIMAX impractical for complex games. MINIMAX does, however, serve as a basis for the mathematical analysis for games. By approximating the minimax analysis in various ways, we can derive more practical algorithms.

If MIN does not play optimally, then MAX will do at least as well as against an optimal player, possibly better.

Pruning

Read the note about pruning (and consult Russel & Norvig (2022) if necessary).

Explain in your own words, under what conditions a subtree is skipped using Alpha-beta pruning.

Draw an example (game search tree, 3 levels depth).

Literature

Footnotes

An atomic representation is one in which each state is treated as a black box with not internal structure, meaning the state either does or does not match what you’re looking for.

A state is a situation that an agent can find itself in.

Expressed by a graph whose nodes are the set of all states, and whose links are actions that transform one state into another

Can be a single goal state, a small set of alternative goal states, or a property that applies to many states (e.g, no dirt in any location)

first-in-first-out queue first pops the node that was added to the queue first

last-in-first-out queue (also known as a stack) pops first the most recently added node

first pops the node with the minimum costs according to \(f(n)\)

Zero-sum means that what is good for one player is just as bad for the other: there is no “win-win” outcome

States where the game has ended are called terminal states

The search tree consists of AND nodes and OR nodes. AND nodes reflect the environment’s choice of action (which all need to be considers), OR nodes the agent’s own choices in each state (where only one needs to be considered). AND and OR nodes alternate. A solution is a conditional plan that considers every nondeterministic outcome and makes a plan for each one.

guided by the selection policy

For games with binary outcomes, average utility equals win percentage

A heuristic evaluation function returns an estimate of the expected utility of state \(s\) to player \(p\).

Playout policies biases the moves toward good ones. For Go and other games, playout policies have been successfully learned from self-play by using neural networks. Sometimes also game-specific heuristics are used (e.g., take the corner square in Othello)